Preface

Disaster often strikes at the worst possible time, and hope is not a good strategy.

This document will cover how to set up Disaster Recovery of a Managed Kubernetes cluster using the backup tool Velero.

Information

This article uses Velero version 1.14 as a reference and uses macOS on x86_64 for most of the steps. Installing on another operating system should be a similar process.

Pre-Requisites

Below are the requirements for continuing with the actions described here:

- An S3-compatible storage, such as Leaseweb Object-storage

- A bucket created specifically to store your backup

- A key-id and a secret-key with access to the bucket

- An S3 URL, assumed to be https://nl.object-storage.io

- A region, assumed to be nl-01

- Some command-line experience

- A Leaseweb Managed Kubernetes Cluster

Velero

Velero is a VMware-backed open-source project that can be used as a backup and restore tool and as a Disaster Recovery (DR) tool. Velero can also be employed to migrate Kubernetes cluster resources and PersistentVolumes. It backs up and restores the persistent data contained in PersistentVolumes either through file system backup, using Kopia (the default) or Restic, or through the snapshot capability native to the platform, with a snapshot controller.

Warning

Leaseweb currently does not support snapshotter on Leaseweb Managed Kubernetes.

We recommend using Kopia to back up PersistentVolumes.

Installing and using Velero consists of the following steps:

- Install a Command-line tool to be able to talk with Velero

- Generate a config file

- Deploy Velero components in the Leaseweb Managed Kubernetes cluster using kubectl apply

- Perform a backup and restore operation

Installation

Following the Velero documentation, the command-line tool can be installed on Linux, macOS, or Windows.

Command-line tool

macOS – with Homebrew

The easiest way is to use the ‘brew‘ package manager with the following command:

$ brew install velero

From GitHub – Any Linux Distribution or macOS

The following commands download version 1.14.1 of Velero in ‘.tar.gz’ format, extract the binary, and move it to /usr/local/bin.

For this to work properly, /usr/local/bin should be present in the $PATH environment variable.
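As a quick check before moving the binary, the following sketch tests whether a directory is listed in $PATH. The function name path_contains is only an illustration and is not part of Velero.

```shell
# Return success when the given directory is one of the entries in $PATH.
# Wrapping both sides in ":" avoids partial matches such as /usr/local/bin-extra.
path_contains() {
  case ":${PATH}:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

if path_contains /usr/local/bin; then
  echo "/usr/local/bin is in PATH"
else
  echo "/usr/local/bin is missing from PATH"
fi
```

If the directory is missing, it can be added in the shell profile, or the binary can be moved to another directory that is already listed.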

The latest release can be found at https://github.com/vmware-tanzu/velero/releases. The release archive should match the operating system and architecture of the machine running the client.
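To pick the matching archive, the file name can be derived from the operating system and CPU type. The sketch below assumes the naming pattern visible on the releases page (velero-VERSION-OS-ARCH.tar.gz); the function velero_asset_name is a hypothetical helper, and only Linux and macOS on amd64 or arm64 are handled.

```shell
# Build the release archive name for a Velero version, kernel name and machine type.
# The last two arguments are expected in the form printed by `uname -s` and `uname -m`.
velero_asset_name() {
  version="$1"; kernel="$2"; machine="$3"
  case "$kernel" in
    Linux)  os="linux" ;;
    Darwin) os="darwin" ;;
    *) echo "unsupported OS: $kernel" >&2; return 1 ;;
  esac
  case "$machine" in
    x86_64|amd64)  arch="amd64" ;;
    aarch64|arm64) arch="arm64" ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
  echo "velero-${version}-${os}-${arch}.tar.gz"
}

# Example for the platform assumed in this article.
velero_asset_name v1.14.1 Linux x86_64
```

On the machine that will run the client, the same function can be called as velero_asset_name v1.14.1 "$(uname -s)" "$(uname -m)".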

Note that Linux on x86_64 is assumed as the target platform for the rest of the article.

For that architecture, the right file is named ‘velero-v1.14.1-linux-amd64.tar.gz‘:

$ curl -L -O https://github.com/vmware-tanzu/velero/releases/download/v1.14.1/velero-v1.14.1-linux-amd64.tar.gz

$ tar -xvf velero-v1.14.1-linux-amd64.tar.gz

$ cd velero-v1.14.1-linux-amd64

$ mv velero /usr/local/bin/

Validate the installation for both the ‘brew’ and GitHub installations:

$ velero version --client-only

Client:

Version: v1.14.1

Git commit: 8afe3cea8b7058f7baaf447b9fb407312c40d2da

When the '--client-only' parameter is omitted, the command returns an error because it also tries to get the server version, and the server is not installed yet:

$ velero version

An error occurred: error finding Kubernetes API server config in --kubeconfig, $KUBECONFIG, or in-cluster configuration: invalid configuration: no configuration has been provided, try setting KUBERNETES_MASTER environment variable

Windows – Chocolatey

On Windows, please use ‘Chocolatey‘ to install Velero, as follows:

PS C:\Users\Administrator> choco install velero

Chocolatey v2.3.0

Installing the following packages:

velero

By installing, you accept licenses for the packages.

Downloading package from source 'https://community.chocolatey.org/api/v2/'

Progress: Downloading velero 1.14.1... 100%

velero v1.14.1 [Approved]

velero package files install completed. Performing other installation steps.

The package velero wants to run 'chocolateyinstall.ps1'.

Note: If you don't run this script, the installation will fail.

Note: To confirm automatically next time, use '-y' or consider:

choco feature enable -n allowGlobalConfirmation

Do you want to run the script?([Y]es/[A]ll - yes to all/[N]o/[P]rint): A

Downloading velero 64 bit

from 'https://github.com/vmware-tanzu/velero/releases/download/v1.14.1/velero-v1.14.1-windows-amd64.tar.gz'

Progress: 100% - Completed download of C:\Users\Administrator\AppData\Local\Temp\chocolatey\velero\1.14.1\velero-v1.14.1-windows-amd64.tar.gz (43.02 MB).

Download of velero-v1.14.1-windows-amd64.tar.gz (43.02 MB) completed.

Hashes match.

Extracting C:\Users\Administrator\AppData\Local\Temp\chocolatey\velero\1.14.1\velero-v1.14.1-windows-amd64.tar.gz to C:\ProgramData\chocolatey\lib\velero\tools...

C:\ProgramData\chocolatey\lib\velero\tools

Extracting C:\ProgramData\chocolatey\lib\velero\tools\velero-v1.14.1-windows-amd64.tar to C:\ProgramData\chocolatey\lib\velero\tools\...

C:\ProgramData\chocolatey\lib\velero\tools\

ShimGen has successfully created a shim for velero.exe

The install of velero was successful.

Deployed to 'C:\ProgramData\chocolatey\lib\velero\tools\'

Chocolatey installed 1/1 packages.

See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

Then, validate the Windows installation:

PS C:\Users\Administrator> velero version --client-only

Client:

Version: v1.14.1

Git commit: 8afe3cea8b7058f7baaf447b9fb407312c40d2da

Optional installation of the autocompletion

The tool comes with shell autocompletion, which can be installed after the command-line client.

The instructions to install the autocompletion are available in this document and are considered out of the scope of this article.

Server Installation

Once the command-line tool is installed, Velero must be installed in the cluster before a first backup can be taken.

Either the ‘velero install‘ command or a Helm chart can be used to install the operator.

CLI Installation

This article requires S3-compatible storage with a pre-created bucket and proper access setup.

For this document, we will use ‘backup-velero‘ as the name of the bucket. For further details on how to create a bucket, see the object storage overview article in the Leaseweb Knowledge Base.

The Leaseweb Object storage can provide you with an S3-compatible endpoint to store your backups.

Create a secret file named ‘credentials-velero‘ with the following content, replacing the placeholders with your access key ID and secret access key. Velero uses these credentials to authenticate against the S3 repository:

[default]

aws_access_key_id = <KEY_ID>

aws_secret_access_key = <SECRET_KEY>

Once the file is created, fine-tune the parameters below. Running the ‘velero install‘ command as follows creates the file ‘velero-installation.yaml‘:

velero install \

--provider aws \

--plugins velero/velero-plugin-for-aws:v1.10.0 \

--bucket backup-velero \

--secret-file credentials-velero \

--use-node-agent \

--default-volumes-to-fs-backup \

--backup-location-config checksumAlgorithm="",region=nl-01,s3ForcePathStyle="true",s3Url=https://nl.object-storage.io \

-o yaml \

--dry-run > velero-installation.yaml
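The value passed to --backup-location-config above is a comma-separated list of key=value pairs. As a minimal sketch, it can be assembled from variables so that the endpoint and region are set in one place. The variable names and the join_by_comma helper are assumptions made for this example; the values are the ones assumed in this article.

```shell
# Values assumed in this article; replace them with your own endpoint and region.
S3_URL="https://nl.object-storage.io"
S3_REGION="nl-01"

# Join all arguments with commas to form key1=value1,key2=value2.
join_by_comma() {
  old_ifs="$IFS"; IFS=","; joined="$*"; IFS="$old_ifs"
  echo "$joined"
}

BSL_CONFIG="$(join_by_comma \
  'checksumAlgorithm=' \
  "region=${S3_REGION}" \
  's3ForcePathStyle=true' \
  "s3Url=${S3_URL}")"

echo "$BSL_CONFIG"
```

The resulting string can then be passed as --backup-location-config "$BSL_CONFIG". After the shell removes the quotes, it is the same value as the one written inline in the command above.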

# Explanation of the different parameters on the command line

--provider aws # The provider used for backing up the data

--plugins velero/velero-plugin-for-aws:v1.10.0 # Plugin container images to install into the Velero Deployment. This plugin enables the use of S3-compatible object storage

--bucket backup-velero # Name of the object storage bucket where backups should be stored

--secret-file credentials-velero # File containing credentials for backup and volume provider. If not specified, --no-secret must be used for confirmation. Optional.

--use-node-agent # Create Velero node-agent daemonset. Optional. Velero node-agent hosts Velero modules that need to run in one or more nodes (i.e. Restic, Kopia)

--default-volumes-to-fs-backup # Bool flag to configure Velero server to use pod volume file system backup by default for all volumes on all backups. Optional.

--backup-location-config checksumAlgorithm="",region=nl-01,s3ForcePathStyle="true",s3Url=https://nl.object-storage.io # Configuration to use for the backup storage location. Format is key1=value1,key2=value2

-o yaml # Output display format. For create commands, display the object but do not send it to the server. Valid formats are 'table', 'json', and 'yaml'. 'table' is not valid for the install command.

--dry-run # Generate resources, but don't send them to the cluster. Use with -o. Optional.

The file ‘velero-installation.yaml‘ can now be used to install Velero on the cluster:

$ kubectl create -f velero-installation.yaml

customresourcedefinition.apiextensions.k8s.io/backuprepositories.velero.io created

customresourcedefinition.apiextensions.k8s.io/backups.velero.io created

customresourcedefinition.apiextensions.k8s.io/backupstoragelocations.velero.io created

customresourcedefinition.apiextensions.k8s.io/deletebackuprequests.velero.io created

customresourcedefinition.apiextensions.k8s.io/downloadrequests.velero.io created

customresourcedefinition.apiextensions.k8s.io/podvolumebackups.velero.io created

customresourcedefinition.apiextensions.k8s.io/podvolumerestores.velero.io created

customresourcedefinition.apiextensions.k8s.io/restores.velero.io created

customresourcedefinition.apiextensions.k8s.io/schedules.velero.io created

customresourcedefinition.apiextensions.k8s.io/serverstatusrequests.velero.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshotlocations.velero.io created

customresourcedefinition.apiextensions.k8s.io/datadownloads.velero.io created

customresourcedefinition.apiextensions.k8s.io/datauploads.velero.io created

namespace/velero created

clusterrolebinding.rbac.authorization.k8s.io/velero created

serviceaccount/velero created

secret/cloud-credentials created

backupstoragelocation.velero.io/default created

volumesnapshotlocation.velero.io/default created

deployment.apps/velero created

daemonset.apps/node-agent created

This results in all the components being deployed:

# Resulting installation

$ kubectl get all -n velero -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/node-agent-7fmqx 1/1 Running 0 61s 10.244.1.191 k8s-0000001-worker-0-87qg9-zbwwg <none> <none>

pod/node-agent-h8gs6 1/1 Running 0 61s 10.244.0.242 k8s-0000001-worker-0-87qg9-5nscc <none> <none>

pod/velero-bfb7fb7dc-v7xnr 1/1 Running 0 61s 10.244.1.77 k8s-0000001-worker-0-87qg9-zbwwg <none> <none>

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE CONTAINERS IMAGES SELECTOR

daemonset.apps/node-agent 2 2 2 2 2 <none> 61s node-agent velero/velero:v1.14.1 name=node-agent

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/velero 1/1 1 1 61s velero velero/velero:v1.14.1 deploy=velero

NAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/velero-bfb7fb7dc 1 1 1 61s velero velero/velero:v1.14.1 deploy=velero,pod-template-hash=bfb7fb7dc

When using the ‘velero version‘ command, the versions of both the server and the client should now be visible:

$ velero version

Client:

Version: v1.14.1

Git commit: -

Server:

Version: v1.14.1

We are now ready for backup and restore.
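Before taking the first backup, it may be worth confirming that the Velero deployment and the node-agent daemonset have finished rolling out and that the backup storage location is reachable. The sketch below wraps the corresponding kubectl and velero commands in a hypothetical helper named check_velero_ready. The 120-second timeout is an arbitrary choice.

```shell
# Wait for the Velero components created by the installation, then list the
# backup storage locations so their phase can be inspected.
check_velero_ready() {
  ns="${1:-velero}"
  kubectl rollout status deployment/velero -n "$ns" --timeout=120s &&
  kubectl rollout status daemonset/node-agent -n "$ns" --timeout=120s &&
  velero backup-location get -n "$ns"
}

# Usage (requires access to the cluster):
#   check_velero_ready velero
```

A storage location that is not reported as available usually points to a problem with the credentials, the bucket name, or the S3 URL.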

Helm installation

The first step is to add the Velero chart to Helm:

$ helm repo add vmware-tanzu https://vmware-tanzu.github.io/helm-charts

"vmware-tanzu" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "vmware-tanzu" chart repository

Update Complete. ⎈Happy Helming!⎈

Using ‘helm upgrade --install‘, we can deploy the Velero components in the Leaseweb Managed Kubernetes cluster:

$ helm upgrade --install velero vmware-tanzu/velero \

--namespace velero \

--create-namespace \

--set-file credentials.secretContents.cloud=credentials-velero \

--set configuration.backupStorageLocation[0].name=velero \

--set configuration.backupStorageLocation[0].provider=aws \

--set configuration.backupStorageLocation[0].bucket=backup-velero \

--set configuration.backupStorageLocation[0].config.checksumAlgorithm="" \

--set configuration.backupStorageLocation[0].config.region=nl-01 \

--set configuration.backupStorageLocation[0].config.s3ForcePathStyle="true" \

--set configuration.backupStorageLocation[0].config.s3Url="https://nl.object-storage.io" \

--set configuration.volumeSnapshotLocation[0].name=velero \

--set configuration.volumeSnapshotLocation[0].provider=aws \

--set configuration.volumeSnapshotLocation[0].config.region=nl-01 \

--set deployNodeAgent=true \

--set initContainers[0].name=velero-plugin-for-aws \

--set initContainers[0].image=velero/velero-plugin-for-aws:v1.10.0 \

--set initContainers[0].volumeMounts[0].mountPath=/target \

--set initContainers[0].volumeMounts[0].name=plugins

Release "velero" does not exist. Installing it now.

NAME: velero

LAST DEPLOYED: Thu Nov 7 08:10:48 2024

NAMESPACE: velero

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Check that the velero is up and running:

kubectl get deployment/velero -n velero

Check that the secret has been created:

kubectl get secret/velero -n velero

Once velero server is up and running you need the client before you can use it

1. wget https://github.com/vmware-tanzu/velero/releases/download/v1.14.1/velero-v1.14.1-darwin-amd64.tar.gz

2. tar -xvf velero-v1.14.1-darwin-amd64.tar.gz -C velero-client

More info on the official site: https://velero.io/docs

An alternative is to download the ‘values.yaml‘ file from Velero’s GitHub and customise the values.
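As an illustration of the values-file approach, the sketch below writes a small file that mirrors the --set flags used above, rather than starting from the full chart defaults. The file name velero-values.yaml is an assumption. The string quoting of s3ForcePathStyle is a cautious choice rather than something the chart documentation is quoted on here.

```shell
# Write a values file equivalent to the --set flags of the helm command above.
cat > velero-values.yaml <<'EOF'
configuration:
  backupStorageLocation:
    - name: velero
      provider: aws
      bucket: backup-velero
      config:
        checksumAlgorithm: ""
        region: nl-01
        s3ForcePathStyle: "true"
        s3Url: https://nl.object-storage.io
  volumeSnapshotLocation:
    - name: velero
      provider: aws
      config:
        region: nl-01
deployNodeAgent: true
initContainers:
  - name: velero-plugin-for-aws
    image: velero/velero-plugin-for-aws:v1.10.0
    volumeMounts:
      - mountPath: /target
        name: plugins
EOF

# The credentials are still passed from the separate file, for example:
#   helm upgrade --install velero vmware-tanzu/velero \
#     --namespace velero --create-namespace \
#     --set-file credentials.secretContents.cloud=credentials-velero \
#     -f velero-values.yaml
```

Keeping the credentials out of the values file means the file can be stored in version control without exposing the secret key.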

Then kubectl can be used to validate the deployment:

$ kubectl get deployment/velero -n velero

NAME READY UP-TO-DATE AVAILABLE AGE

velero 1/1 1 1 109s

$ kubectl get secret/velero -n velero

NAME TYPE DATA AGE

velero Opaque 1 115s

Application

The application Kube Prometheus Stack will be used as an example to showcase the backup and restore functionality.

You can skip this section if the application to back up is already deployed in the cluster.

Use ‘helm repo add‘ to add the chart repository:

$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

"prometheus-community" has been added to your repositories

$ helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "prometheus-community" chart repository

Update Complete. ⎈Happy Helming!⎈

Then, ‘helm upgrade --install‘ can be used to install the application from the Helm repository:

$ helm upgrade --install mon-stack prometheus-community/kube-prometheus-stack --create-namespace --namespace=monitoring

Release "mon-stack" does not exist. Installing it now.

NAME: mon-stack

LAST DEPLOYED: Wed Nov 6 08:59:40 2024

NAMESPACE: monitoring

STATUS: deployed

REVISION: 1

NOTES:

kube-prometheus-stack has been installed. Check its status by running:

kubectl --namespace monitoring get pods -l "release=mon-stack"

Visit https://github.com/prometheus-operator/kube-prometheus for instructions on how to create & configure Alertmanager and Prometheus instances using the Operator.

The following command verifies the status of the stack deployment. Please verify that all pods are running:

$ kubectl --namespace monitoring get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

alertmanager-mon-stack-kube-prometheus-alertmanager-0 2/2 Running 0 19h 10.244.1.194 k8s-0000001-worker-0-87qg9-zbwwg <none> <none>

mon-stack-grafana-684984b764-hbvqh 3/3 Running 0 19h 10.244.0.113 k8s-0000001-worker-0-87qg9-5nscc <none> <none>

mon-stack-kube-prometheus-operator-cb59fb64c-pldf6 1/1 Running 0 19h 10.244.0.6 k8s-0000001-worker-0-87qg9-5nscc <none> <none>

mon-stack-kube-state-metrics-869cf9756-xl28r 1/1 Running 0 19h 10.244.0.215 k8s-0000001-worker-0-87qg9-5nscc <none> <none>

mon-stack-prometheus-node-exporter-qhwtc 1/1 Running 0 19h 10.1.2.83 k8s-0000001-worker-0-87qg9-zbwwg <none> <none>

mon-stack-prometheus-node-exporter-xcnxg 1/1 Running 0 19h 10.1.2.148 k8s-0000001-worker-0-87qg9-5nscc <none> <none>

prometheus-mon-stack-kube-prometheus-prometheus-0 2/2 Running 0 19h 10.244.0.226 k8s-0000001-worker-0-87qg9-5nscc <none> <none>

Cluster Backup

Now that Velero and an application to back up are installed in the cluster, make an initial backup:

$ velero backup create initial-backup

Backup request "initial-backup" submitted successfully.

Run `velero backup describe initial-backup` or `velero backup logs initial-backup` for more details.

‘velero backup describe‘ can be used to validate the backup, and the ‘--details‘ option provides more information about the status:

$ velero backup describe initial-backup

Name: initial-backup

Namespace: velero

Labels: velero.io/storage-location=default

Annotations: velero.io/resource-timeout=10m0s

velero.io/source-cluster-k8s-gitversion=v1.29.7

velero.io/source-cluster-k8s-major-version=1

velero.io/source-cluster-k8s-minor-version=29

Phase: Completed

Namespaces:

Included: *

Excluded: <none>

Resources:

Included: *

Excluded: <none>

Cluster-scoped: auto

Label selector: <none>

Or label selector: <none>

Storage Location: default

Velero-Native Snapshot PVs: auto

Snapshot Move Data: false

Data Mover: velero

TTL: 720h0m0s

CSISnapshotTimeout: 10m0s

ItemOperationTimeout: 4h0m0s

Hooks: <none>

Backup Format Version: 1.1.0

Started: 2024-11-06 10:10:19 -0500 EST

Completed: 2024-11-06 10:11:03 -0500 EST

Expiration: 2024-12-06 10:10:19 -0500 EST

Total items to be backed up: 593

Items backed up: 593

Backup Volumes:

Velero-Native Snapshots: <none included>

CSI Snapshots: <none included>

Pod Volume Backups - kopia (specify --details for more information):

Completed: 16

HooksAttempted: 0

HooksFailed: 0

A list of the resources and data that were backed up is available. The ‘kopia backups‘ section also shows the file backups of the volumes.

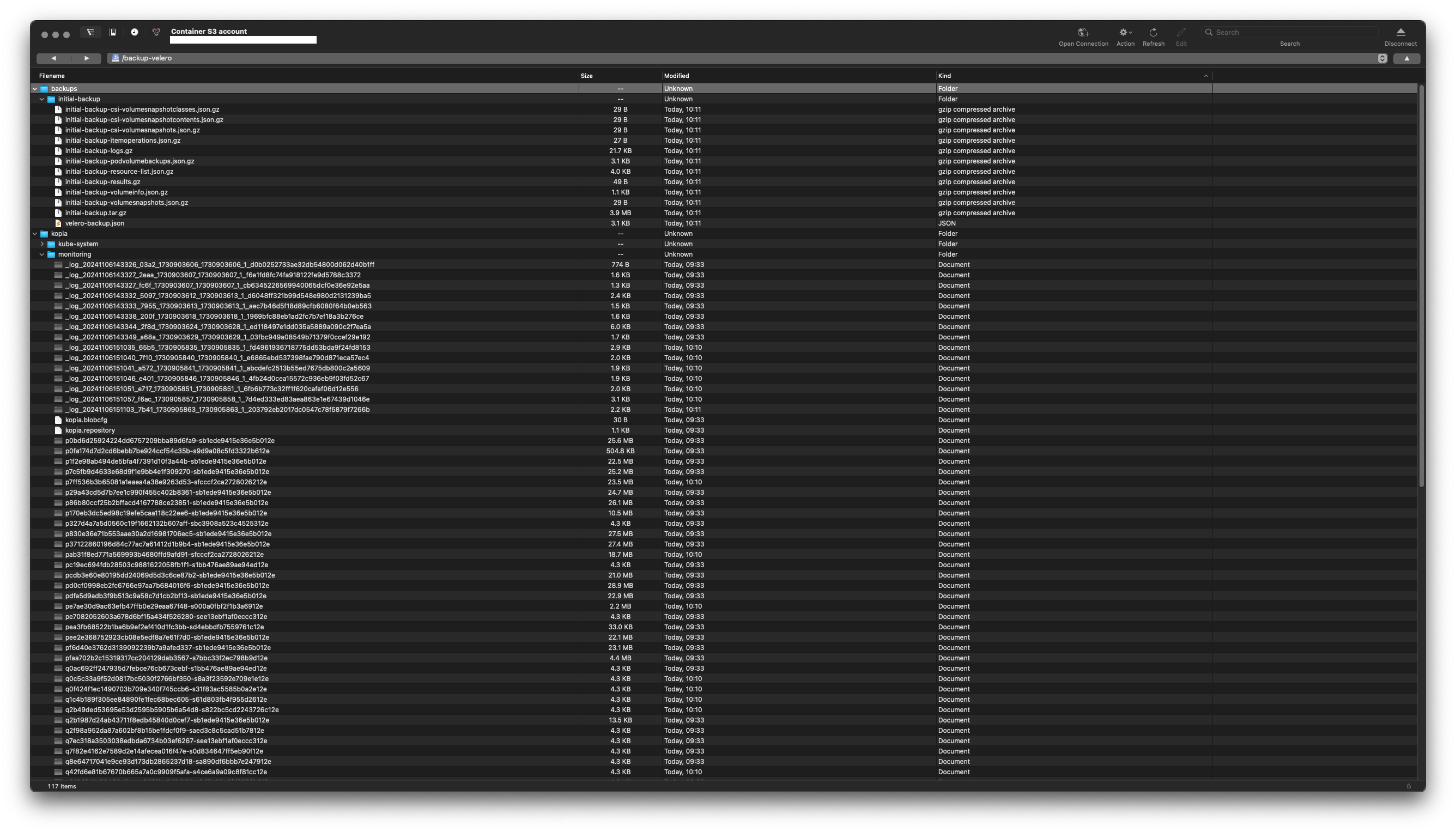
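The Phase field shown in the describe output can also be read directly from the Backup custom resource, which is convenient in scripts. The following sketch polls that field until the backup reaches a terminal phase. The helper names, the number of attempts, and the delay are assumptions chosen for illustration.

```shell
# Print the phase of a Velero backup by reading the Backup custom resource.
backup_phase() {
  kubectl get backups.velero.io "$1" -n "${2:-velero}" -o jsonpath='{.status.phase}'
}

# Poll until the backup completes, fails, or the attempts run out.
# Arguments: backup name, number of attempts (default 30), delay in seconds (default 10).
wait_for_backup() {
  name="$1"; attempts="${2:-30}"; delay="${3:-10}"
  while [ "$attempts" -gt 0 ]; do
    phase="$(backup_phase "$name")"
    case "$phase" in
      Completed)
        echo "backup $name completed"; return 0 ;;
      PartiallyFailed|Failed|FailedValidation)
        echo "backup $name ended in phase $phase" >&2; return 1 ;;
    esac
    attempts=$((attempts - 1))
    sleep "$delay"
  done
  echo "timed out waiting for backup $name" >&2
  return 1
}

# Usage (requires access to the cluster):
#   wait_for_backup initial-backup
```

For interactive use, passing --wait to velero backup create gives a similar result without extra scripting.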

The s3cmd command-line tool, or a graphical user interface such as Cyberduck, can be used to list the resources and files that were backed up.

$ s3cmd ls s3://backup-velero/ --recursive

2024-11-06 15:11 29 s3://backup-velero/backups/initial-backup/initial-backup-csi-volumesnapshotclasses.json.gz

2024-11-06 15:11 29 s3://backup-velero/backups/initial-backup/initial-backup-csi-volumesnapshotcontents.json.gz

2024-11-06 15:11 29 s3://backup-velero/backups/initial-backup/initial-backup-csi-volumesnapshots.json.gz

2024-11-06 15:11 27 s3://backup-velero/backups/initial-backup/initial-backup-itemoperations.json.gz

2024-11-06 15:11 21K s3://backup-velero/backups/initial-backup/initial-backup-logs.gz

2024-11-06 15:11 3K s3://backup-velero/backups/initial-backup/initial-backup-podvolumebackups.json.gz

2024-11-06 15:11 3K s3://backup-velero/backups/initial-backup/initial-backup-resource-list.json.gz

2024-11-06 15:11 49 s3://backup-velero/backups/initial-backup/initial-backup-results.gz

2024-11-06 15:11 1138 s3://backup-velero/backups/initial-backup/initial-backup-volumeinfo.json.gz

2024-11-06 15:11 29 s3://backup-velero/backups/initial-backup/initial-backup-volumesnapshots.json.gz

2024-11-06 15:11 3M s3://backup-velero/backups/initial-backup/initial-backup.tar.gz

2024-11-06 15:11 3K s3://backup-velero/backups/initial-backup/velero-backup.json

2024-11-06 14:33 776 s3://backup-velero/kopia/kube-system/_log_20241106143324_35d0_1730903604_1730903604_1_c93b003ee0c85927321e66a59fb2cded

2024-11-06 14:33 8K s3://backup-velero/kopia/kube-system/_log_20241106143325_e619_1730903605_1730903606_1_bd1c371641694bb5662fd00b1b0e2728

2024-11-06 14:33 1514 s3://backup-velero/kopia/kube-system/_log_20241106143325_e84d_1730903605_1730903606_1_668e41416ab94bf9bc35b44b280d67a3

2024-11-06 14:33 1348 s3://backup-velero/kopia/kube-system/_log_20241106143331_a2e1_1730903611_1730903611_1_3704a81232aea5766f4f871341b26cf3

2024-11-06 14:33 1473 s3://backup-velero/kopia/kube-system/_log_20241106143331_b99f_1730903611_1730903611_1_d52198d3ce2854c9d5b28bef92009968

2024-11-06 14:33 1642 s3://backup-velero/kopia/kube-system/_log_20241106143336_5064_1730903616_1730903617_1_3483c6e1de37d4a141fbd7e5a9ca63cd

2024-11-06 15:10 1548 s3://backup-velero/kopia/kube-system/_log_20241106151023_2e9c_1730905823_1730905824_1_2936d9a371e690f5f104af528b93da00

2024-11-06 15:10 1654 s3://backup-velero/kopia/kube-system/_log_20241106151024_2577_1730905824_1730905824_1_c0c41436ae85ebb04968878f58965532

2024-11-06 15:10 2K s3://backup-velero/kopia/kube-system/_log_20241106151029_7cd4_1730905829_1730905829_1_81d092475bfe28a72b74ef2edd9ca8b3

2024-11-06 15:10 1834 s3://backup-velero/kopia/kube-system/_log_20241106151029_ccda_1730905829_1730905830_1_0aa309be08108dcfdc28276337d20945

2024-11-06 15:10 1867 s3://backup-velero/kopia/kube-system/_log_20241106151034_0c45_1730905834_1730905835_1_0d62b2a5228c3e3899add23b95f8a517

2024-11-06 14:33 30 s3://backup-velero/kopia/kube-system/kopia.blobcfg

2024-11-06 14:33 1075 s3://backup-velero/kopia/kube-system/kopia.repository

Cluster Restore

Warning

To be restored, an object or volume must not already be present in the Kubernetes cluster. By default, Velero skips any object or volume that it finds already present during the restore process. Even if the data is different, it will not overwrite it.
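Because objects that already exist are skipped, it can be useful to check which namespaces are still present before starting a restore. The sketch below is one way to do that with kubectl. The helper names are hypothetical, and a failed lookup is treated as "not found", so a connectivity problem would be reported the same way.

```shell
# Succeed when the namespace cannot be found in the cluster.
namespace_absent() {
  ! kubectl get namespace "$1" >/dev/null 2>&1
}

# Report, for each namespace given, whether existing objects could block a restore.
report_existing_namespaces() {
  for ns in "$@"; do
    if namespace_absent "$ns"; then
      echo "$ns: not found"
    else
      echo "$ns: exists, objects already present will be skipped"
    fi
  done
}

# Usage (requires access to the cluster):
#   report_existing_namespaces monitoring
```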

Disaster strikes

To simulate a disaster event, the monitoring stack is deleted “by mistake”:

$ helm delete mon-stack -n monitoring

release "mon-stack" uninstalled

$ kubectl get all -n monitoring -owide

No resources found in monitoring namespace.

$ kubectl delete ns monitoring

namespace "monitoring" deleted

$ kubectl get ns

NAME STATUS AGE

default Active 21h

kube-node-lease Active 21h

kube-public Active 21h

kube-system Active 21h

velero Active 66m

Restoring

The command ‘velero restore create initial-restore --from-backup initial-backup‘ creates a restore based on a previously created backup, in our example ‘initial-backup‘:

$ velero restore create initial-restore --from-backup initial-backup

Restore request "initial-restore" submitted successfully.

Run `velero restore describe initial-restore` or `velero restore logs initial-restore` for more details.

Similar to the backup process, a describe command is available to follow the restore process.
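The detailed describe output can be long, because its Resource List marks every object as created, skipped, or failed. As a rough sketch, the entries can be tallied per status with awk. The function name summarize_restore is an assumption, and the parsing relies on the output format shown in this article.

```shell
# Count Resource List entries by status.
# Reads the output of `velero restore describe <name> --details` on stdin.
summarize_restore() {
  awk '
    /\((created|skipped|failed)\)[ \t]*$/ {
      status = $0
      sub(/^.*\(/, "", status)       # drop everything up to the last "("
      sub(/\)[ \t]*$/, "", status)   # drop the trailing ")"
      count[status]++
    }
    END {
      for (s in count) printf "%s=%d\n", s, count[s]
    }
  ' | sort
}

# Usage (requires access to the cluster):
#   velero restore describe initial-restore --details | summarize_restore
```

A large number of skipped or failed entries is expected when most of the cluster still exists, since only the deleted objects need to be recreated.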

$ velero restore describe initial-restore --details

Name: initial-restore

Namespace: velero

Labels: <none>

Annotations: <none>

Phase: Completed

Total items to be restored: 559

Items restored: 559

Started: 2024-11-06 15:04:54 -0500 EST

Completed: 2024-11-06 15:05:17 -0500 EST

Warnings:

Velero: <none>

Cluster: could not restore, CustomResourceDefinition "alertmanagerconfigs.monitoring.coreos.com" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "alertmanagers.monitoring.coreos.com" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "backuprepositories.velero.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "backups.velero.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "backupstoragelocations.velero.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "ciliumcidrgroups.cilium.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "ciliumclusterwidenetworkpolicies.cilium.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "ciliumendpoints.cilium.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "ciliumexternalworkloads.cilium.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "ciliumidentities.cilium.io" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CustomResourceDefinition "ciliuml2announcementpolicies.cilium.io" already exists. Warning: the in-cluster version is different than the backed-up version

[...]

Namespaces:

monitoring: could not restore, ConfigMap "kube-root-ca.crt" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CiliumEndpoint "alertmanager-mon-stack-kube-prometheus-alertmanager-0" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CiliumEndpoint "mon-stack-grafana-684984b764-p6zpc" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CiliumEndpoint "mon-stack-kube-prometheus-operator-cb59fb64c-z99wc" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, CiliumEndpoint "mon-stack-kube-state-metrics-869cf9756-rstfz" already exists. Warning: the in-cluster version is different than the backed-up version

velero: could not restore, Pod "node-agent-5lvs4" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "node-agent-n9v4r" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "velero-bfb7fb7dc-97rf4" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, BackupStorageLocation "default" already exists. Warning: the in-cluster version is different than the backed-up version

default: could not restore, Service "kubernetes" already exists. Warning: the in-cluster version is different than the backed-up version

kube-node-lease: could not restore, Lease "k8s-0000001-worker-0-wbkxn-b2ttm" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Lease "k8s-0000001-worker-0-wbkxn-fd6mj" already exists. Warning: the in-cluster version is different than the backed-up version

kube-system: could not restore, Pod "cilium-59rjg" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "cilium-kw86v" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "cilium-operator-6465748764-gbmrt" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "cilium-operator-6465748764-hngrd" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "cloudstack-csi-node-7zkrn" already exists. Warning: the in-cluster version is different than the backed-up version

could not restore, Pod "cloudstack-csi-node-crwmc" already exists. Warning: the in-cluster version is different than the backed-up version

[...]

Backup: initial-backup

Namespaces:

Included: all namespaces found in the backup

Excluded: <none>

Resources:

Included: *

Excluded: nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io, csinodes.storage.k8s.io, volumeattachments.storage.k8s.io, backuprepositories.velero.io

Cluster-scoped: auto

Namespace mappings: <none>

Label selector: <none>

Or label selector: <none>

Restore PVs: auto

kopia Restores:

Completed:

monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-0: alertmanager-mon-stack-kube-prometheus-alertmanager-db, config-out

monitoring/mon-stack-grafana-684984b764-p6zpc: sc-dashboard-volume, sc-datasources-volume, storage

monitoring/prometheus-mon-stack-kube-prometheus-prometheus-0: config-out, prometheus-mon-stack-kube-prometheus-prometheus-db

CSI Snapshot Restores: <none included>

Existing Resource Policy: <none>

ItemOperationTimeout: 4h0m0s

Preserve Service NodePorts: auto

Uploader config:

HooksAttempted: 0

HooksFailed: 0

Resource List:

apiextensions.k8s.io/v1/CustomResourceDefinition:

- alertmanagerconfigs.monitoring.coreos.com(failed)

- alertmanagers.monitoring.coreos.com(failed)

- backuprepositories.velero.io(failed)

- backups.velero.io(failed)

- backupstoragelocations.velero.io(failed)

- ciliumcidrgroups.cilium.io(failed)

- ciliumclusterwidenetworkpolicies.cilium.io(failed)

- ciliumendpoints.cilium.io(failed)

- ciliumexternalworkloads.cilium.io(failed)

- ciliumidentities.cilium.io(failed)

- ciliuml2announcementpolicies.cilium.io(failed)

- ciliumloadbalancerippools.cilium.io(failed)

- ciliumnetworkpolicies.cilium.io(failed)

- ciliumnodeconfigs.cilium.io(failed)

- ciliumnodes.cilium.io(failed)

- ciliumpodippools.cilium.io(failed)

- datadownloads.velero.io(failed)

- datauploads.velero.io(failed)

- deletebackuprequests.velero.io(failed)

- downloadrequests.velero.io(failed)

- podmonitors.monitoring.coreos.com(failed)

- podvolumebackups.velero.io(failed)

- podvolumerestores.velero.io(failed)

- probes.monitoring.coreos.com(failed)

- prometheusagents.monitoring.coreos.com(failed)

- prometheuses.monitoring.coreos.com(failed)

- prometheusrules.monitoring.coreos.com(failed)

- restores.velero.io(failed)

- schedules.velero.io(failed)

- scrapeconfigs.monitoring.coreos.com(failed)

- serverstatusrequests.velero.io(failed)

- servicemonitors.monitoring.coreos.com(failed)

- thanosrulers.monitoring.coreos.com(failed)

- volumesnapshotlocations.velero.io(failed)

apiregistration.k8s.io/v1/APIService:

- v1.(skipped)

- v1.admissionregistration.k8s.io(skipped)

- v1.apiextensions.k8s.io(skipped)

- v1.apps(skipped)

- v1.authentication.k8s.io(skipped)

- v1.authorization.k8s.io(skipped)

- v1.autoscaling(skipped)

- v1.batch(skipped)

- v1.certificates.k8s.io(skipped)

- v1.coordination.k8s.io(skipped)

- v1.discovery.k8s.io(skipped)

- v1.events.k8s.io(skipped)

- v1.flowcontrol.apiserver.k8s.io(skipped)

- v1.monitoring.coreos.com(skipped)

- v1.networking.k8s.io(skipped)

- v1.node.k8s.io(skipped)

- v1.policy(skipped)

- v1.rbac.authorization.k8s.io(skipped)

- v1.scheduling.k8s.io(skipped)

- v1.storage.k8s.io(skipped)

- v1.velero.io(skipped)

- v1alpha1.monitoring.coreos.com(skipped)

- v1beta3.flowcontrol.apiserver.k8s.io(skipped)

- v2.autoscaling(skipped)

- v2.cilium.io(skipped)

- v2alpha1.cilium.io(skipped)

- v2alpha1.velero.io(skipped)

apps/v1/ControllerRevision:

- kube-system/cilium-7447555dc7(skipped)

- kube-system/cloudstack-csi-node-8f4898fb9(skipped)

- kube-system/konnectivity-agent-6658548c64(skipped)

- kube-system/konnectivity-agent-764cc64f94(skipped)

- kube-system/kube-proxy-57d977457b(skipped)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-859c4b768c(created)

- monitoring/mon-stack-prometheus-node-exporter-66b8d7bb78(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-5cd466bb9(created)

- velero/node-agent-6fb8c698f(skipped)

apps/v1/DaemonSet:

- kube-system/cilium(skipped)

- kube-system/cloudstack-csi-node(skipped)

- kube-system/konnectivity-agent(skipped)

- kube-system/kube-proxy(skipped)

- monitoring/mon-stack-prometheus-node-exporter(created)

- velero/node-agent(skipped)

apps/v1/Deployment:

- kube-system/cilium-operator(skipped)

- kube-system/coredns(skipped)

- kube-system/hubble-relay(skipped)

- kube-system/hubble-ui(skipped)

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-kube-prometheus-operator(created)

- monitoring/mon-stack-kube-state-metrics(created)

- velero/velero(skipped)

apps/v1/ReplicaSet:

- kube-system/cilium-operator-6465748764(skipped)

- kube-system/coredns-54ff9b9dd9(skipped)

- kube-system/hubble-relay-5446cbb587(skipped)

- kube-system/hubble-ui-647f4487ff(skipped)

- monitoring/mon-stack-grafana-684984b764(created)

- monitoring/mon-stack-kube-prometheus-operator-cb59fb64c(created)

- monitoring/mon-stack-kube-state-metrics-869cf9756(created)

- velero/velero-bfb7fb7dc(skipped)

apps/v1/StatefulSet:

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus(created)

certificates.k8s.io/v1/CertificateSigningRequest:

- csr-6qchb(skipped)

- csr-98lbt(skipped)

- csr-cpfh7(skipped)

- csr-ssznw(skipped)

cilium.io/v2/CiliumEndpoint:

- kube-system/cloudstack-csi-node-7zkrn(skipped)

- kube-system/cloudstack-csi-node-crwmc(skipped)

- kube-system/coredns-54ff9b9dd9-8qftm(skipped)

- kube-system/coredns-54ff9b9dd9-r4wgq(skipped)

- kube-system/hubble-relay-5446cbb587-9d8fb(skipped)

- kube-system/hubble-ui-647f4487ff-s6kpj(skipped)

- kube-system/konnectivity-agent-btbpc(skipped)

- kube-system/konnectivity-agent-jsjcb(skipped)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-0(failed)

- monitoring/mon-stack-grafana-684984b764-p6zpc(failed)

- monitoring/mon-stack-kube-prometheus-operator-cb59fb64c-z99wc(failed)

- monitoring/mon-stack-kube-state-metrics-869cf9756-rstfz(failed)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-0(created)

- velero/node-agent-5lvs4(skipped)

- velero/node-agent-n9v4r(skipped)

- velero/velero-bfb7fb7dc-97rf4(skipped)

cilium.io/v2/CiliumIdentity:

- 14398(skipped)

- 1618(skipped)

- 26239(skipped)

- 29454(skipped)

- 32942(skipped)

- 4130(skipped)

- 4891(skipped)

- 5103(skipped)

- 57859(skipped)

- 63494(skipped)

- 9813(skipped)

cilium.io/v2/CiliumNode:

- k8s-0000001-worker-0-wbkxn-b2ttm(skipped)

- k8s-0000001-worker-0-wbkxn-fd6mj(skipped)

coordination.k8s.io/v1/Lease:

- kube-node-lease/k8s-0000001-worker-0-wbkxn-b2ttm(failed)

- kube-node-lease/k8s-0000001-worker-0-wbkxn-fd6mj(failed)

- kube-system/apiserver-jkiuzhixuimaxsllsunvge3yz4(failed)

- kube-system/apiserver-kjsng54bn5fdvegw45o5ypoz4e(failed)

- kube-system/apiserver-kpfmop23owmvkn7yyumytvdpje(skipped)

- kube-system/apiserver-n37cw4zqmgejclud7fbtc224im(skipped)

- kube-system/cilium-operator-resource-lock(failed)

- kube-system/cloud-controller-manager(failed)

- kube-system/csi-cloudstack-apache-org(failed)

- kube-system/external-attacher-leader-csi-cloudstack-apache-org(failed)

- kube-system/external-resizer-csi-cloudstack-apache-org(failed)

- kube-system/kube-controller-manager(failed)

- kube-system/kube-scheduler(failed)

- kube-system/kubelet-csr-approver(failed)

discovery.k8s.io/v1/EndpointSlice:

- default/kubernetes(skipped)

- kube-system/hubble-peer-m4cv7(skipped)

- kube-system/hubble-relay-dwnq7(skipped)

- kube-system/hubble-ui-6pxk9(skipped)

- kube-system/kube-dns-8v9rn(skipped)

- kube-system/mon-stack-kube-prometheus-coredns-jnkp4(created)

- kube-system/mon-stack-kube-prometheus-kubelet-25ccg(skipped)

- monitoring/alertmanager-operated-4pl44(created)

- monitoring/mon-stack-grafana-pfx8b(created)

- monitoring/mon-stack-kube-prometheus-alertmanager-jkwqp(created)

- monitoring/mon-stack-kube-prometheus-operator-fxhwv(created)

- monitoring/mon-stack-kube-prometheus-prometheus-g9khh(created)

- monitoring/mon-stack-kube-state-metrics-zvqcb(created)

- monitoring/mon-stack-prometheus-node-exporter-rf9zk(created)

- monitoring/prometheus-operated-5s9rb(created)

flowcontrol.apiserver.k8s.io/v1/FlowSchema:

- catch-all(skipped)

- endpoint-controller(skipped)

- exempt(skipped)

- global-default(skipped)

- kube-controller-manager(skipped)

- kube-scheduler(skipped)

- kube-system-service-accounts(skipped)

- probes(skipped)

- service-accounts(skipped)

- system-leader-election(skipped)

- system-node-high(skipped)

- system-nodes(skipped)

- workload-leader-election(skipped)

flowcontrol.apiserver.k8s.io/v1/PriorityLevelConfiguration:

- catch-all(skipped)

- exempt(skipped)

- global-default(skipped)

- leader-election(skipped)

- node-high(skipped)

- system(skipped)

- workload-high(skipped)

- workload-low(skipped)

monitoring.coreos.com/v1/Alertmanager:

- monitoring/mon-stack-kube-prometheus-alertmanager(created)

monitoring.coreos.com/v1/Prometheus:

- monitoring/mon-stack-kube-prometheus-prometheus(created)

monitoring.coreos.com/v1/PrometheusRule:

- monitoring/mon-stack-kube-prometheus-alertmanager.rules(created)

- monitoring/mon-stack-kube-prometheus-config-reloaders(created)

- monitoring/mon-stack-kube-prometheus-general.rules(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.container-cpu-usage-seconds(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.container-memory-cache(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.container-memory-rss(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.container-memory-swap(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.container-memory-working-se(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.container-resource(created)

- monitoring/mon-stack-kube-prometheus-k8s.rules.pod-owner(created)

- monitoring/mon-stack-kube-prometheus-kube-apiserver-availability.rules(created)

- monitoring/mon-stack-kube-prometheus-kube-apiserver-burnrate.rules(created)

- monitoring/mon-stack-kube-prometheus-kube-apiserver-histogram.rules(created)

- monitoring/mon-stack-kube-prometheus-kube-apiserver-slos(created)

- monitoring/mon-stack-kube-prometheus-kube-prometheus-general.rules(created)

- monitoring/mon-stack-kube-prometheus-kube-prometheus-node-recording.rules(created)

- monitoring/mon-stack-kube-prometheus-kube-state-metrics(created)

- monitoring/mon-stack-kube-prometheus-kubelet.rules(created)

- monitoring/mon-stack-kube-prometheus-kubernetes-apps(created)

- monitoring/mon-stack-kube-prometheus-kubernetes-resources(created)

- monitoring/mon-stack-kube-prometheus-kubernetes-storage(created)

- monitoring/mon-stack-kube-prometheus-kubernetes-system(created)

- monitoring/mon-stack-kube-prometheus-kubernetes-system-apiserver(created)

- monitoring/mon-stack-kube-prometheus-kubernetes-system-kubelet(created)

- monitoring/mon-stack-kube-prometheus-node-exporter(created)

- monitoring/mon-stack-kube-prometheus-node-exporter.rules(created)

- monitoring/mon-stack-kube-prometheus-node-network(created)

- monitoring/mon-stack-kube-prometheus-node.rules(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

- monitoring/mon-stack-kube-prometheus-prometheus-operator(created)

monitoring.coreos.com/v1/ServiceMonitor:

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-kube-prometheus-alertmanager(created)

- monitoring/mon-stack-kube-prometheus-apiserver(created)

- monitoring/mon-stack-kube-prometheus-coredns(created)

- monitoring/mon-stack-kube-prometheus-kubelet(created)

- monitoring/mon-stack-kube-prometheus-operator(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

- monitoring/mon-stack-kube-state-metrics(created)

- monitoring/mon-stack-prometheus-node-exporter(created)

networking.k8s.io/v1/Ingress:

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

rbac.authorization.k8s.io/v1/ClusterRole:

- admin(skipped)

- cilium(skipped)

- cilium-operator(skipped)

- cluster-admin(skipped)

- cs-csi-node-cluster-role(skipped)

- edit(skipped)

- hubble-ui(skipped)

- kubeadm:get-nodes(skipped)

- mon-stack-grafana-clusterrole(created)

- mon-stack-kube-prometheus-operator(created)

- mon-stack-kube-prometheus-prometheus(created)

- mon-stack-kube-state-metrics(created)

- system:aggregate-to-admin(skipped)

- system:aggregate-to-edit(skipped)

- system:aggregate-to-view(skipped)

- system:auth-delegator(skipped)

- system:basic-user(skipped)

- system:certificates.k8s.io:certificatesigningrequests:nodeclient(skipped)

- system:certificates.k8s.io:certificatesigningrequests:selfnodeclient(skipped)

- system:certificates.k8s.io:kube-apiserver-client-approver(skipped)

- system:certificates.k8s.io:kube-apiserver-client-kubelet-approver(skipped)

- system:certificates.k8s.io:kubelet-serving-approver(skipped)

- system:certificates.k8s.io:legacy-unknown-approver(skipped)

- system:controller:attachdetach-controller(skipped)

- system:controller:certificate-controller(skipped)

- system:controller:clusterrole-aggregation-controller(skipped)

- system:controller:cronjob-controller(skipped)

- system:controller:daemon-set-controller(skipped)

- system:controller:deployment-controller(skipped)

- system:controller:disruption-controller(skipped)

- system:controller:endpoint-controller(skipped)

- system:controller:endpointslice-controller(skipped)

- system:controller:endpointslicemirroring-controller(skipped)

- system:controller:ephemeral-volume-controller(skipped)

- system:controller:expand-controller(skipped)

- system:controller:generic-garbage-collector(skipped)

- system:controller:horizontal-pod-autoscaler(skipped)

- system:controller:job-controller(skipped)

- system:controller:legacy-service-account-token-cleaner(skipped)

- system:controller:namespace-controller(skipped)

- system:controller:node-controller(skipped)

- system:controller:persistent-volume-binder(skipped)

- system:controller:pod-garbage-collector(skipped)

- system:controller:pv-protection-controller(skipped)

- system:controller:pvc-protection-controller(skipped)

- system:controller:replicaset-controller(skipped)

- system:controller:replication-controller(skipped)

- system:controller:resourcequota-controller(skipped)

- system:controller:root-ca-cert-publisher(skipped)

- system:controller:route-controller(skipped)

- system:controller:service-account-controller(skipped)

- system:controller:service-controller(skipped)

- system:controller:statefulset-controller(skipped)

- system:controller:ttl-after-finished-controller(skipped)

- system:controller:ttl-controller(skipped)

- system:coredns(skipped)

- system:discovery(skipped)

- system:heapster(skipped)

- system:kube-aggregator(skipped)

- system:kube-controller-manager(skipped)

- system:kube-dns(skipped)

- system:kube-scheduler(skipped)

- system:kubelet-api-admin(skipped)

- system:monitoring(skipped)

- system:node(skipped)

- system:node-bootstrapper(skipped)

- system:node-problem-detector(skipped)

- system:node-proxier(skipped)

- system:persistent-volume-provisioner(skipped)

- system:public-info-viewer(skipped)

- system:service-account-issuer-discovery(skipped)

- system:volume-scheduler(skipped)

- view(skipped)

rbac.authorization.k8s.io/v1/ClusterRoleBinding:

- cilium(skipped)

- cilium-operator(skipped)

- cluster-admin(skipped)

- cs-csi-node-cluster-role-binding(skipped)

- hubble-ui(skipped)

- kubeadm:cluster-admins(skipped)

- kubeadm:get-nodes(skipped)

- kubeadm:kubelet-bootstrap(skipped)

- kubeadm:node-autoapprove-bootstrap(skipped)

- kubeadm:node-autoapprove-certificate-rotation(skipped)

- kubeadm:node-proxier(skipped)

- mon-stack-grafana-clusterrolebinding(created)

- mon-stack-kube-prometheus-operator(created)

- mon-stack-kube-prometheus-prometheus(created)

- mon-stack-kube-state-metrics(created)

- system:basic-user(skipped)

- system:controller:attachdetach-controller(skipped)

- system:controller:certificate-controller(skipped)

- system:controller:clusterrole-aggregation-controller(skipped)

- system:controller:cronjob-controller(skipped)

- system:controller:daemon-set-controller(skipped)

- system:controller:deployment-controller(skipped)

- system:controller:disruption-controller(skipped)

- system:controller:endpoint-controller(skipped)

- system:controller:endpointslice-controller(skipped)

- system:controller:endpointslicemirroring-controller(skipped)

- system:controller:ephemeral-volume-controller(skipped)

- system:controller:expand-controller(skipped)

- system:controller:generic-garbage-collector(skipped)

- system:controller:horizontal-pod-autoscaler(skipped)

- system:controller:job-controller(skipped)

- system:controller:legacy-service-account-token-cleaner(skipped)

- system:controller:namespace-controller(skipped)

- system:controller:node-controller(skipped)

- system:controller:persistent-volume-binder(skipped)

- system:controller:pod-garbage-collector(skipped)

- system:controller:pv-protection-controller(skipped)

- system:controller:pvc-protection-controller(skipped)

- system:controller:replicaset-controller(skipped)

- system:controller:replication-controller(skipped)

- system:controller:resourcequota-controller(skipped)

- system:controller:root-ca-cert-publisher(skipped)

- system:controller:route-controller(skipped)

- system:controller:service-account-controller(skipped)

- system:controller:service-controller(skipped)

- system:controller:statefulset-controller(skipped)

- system:controller:ttl-after-finished-controller(skipped)

- system:controller:ttl-controller(skipped)

- system:coredns(skipped)

- system:discovery(skipped)

- system:konnectivity-server(skipped)

- system:kube-controller-manager(skipped)

- system:kube-dns(skipped)

- system:kube-scheduler(skipped)

- system:monitoring(skipped)

- system:node(skipped)

- system:node-proxier(skipped)

- system:public-info-viewer(skipped)

- system:service-account-issuer-discovery(skipped)

- system:volume-scheduler(skipped)

- velero(skipped)

rbac.authorization.k8s.io/v1/Role:

- kube-public/kubeadm:bootstrap-signer-clusterinfo(skipped)

- kube-public/system:controller:bootstrap-signer(skipped)

- kube-system/cilium-config-agent(skipped)

- kube-system/extension-apiserver-authentication-reader(skipped)

- kube-system/kube-proxy(skipped)

- kube-system/kubeadm:kubelet-config(skipped)

- kube-system/kubeadm:nodes-kubeadm-config(skipped)

- kube-system/system::leader-locking-kube-controller-manager(skipped)

- kube-system/system::leader-locking-kube-scheduler(skipped)

- kube-system/system:controller:bootstrap-signer(skipped)

- kube-system/system:controller:cloud-provider(skipped)

- kube-system/system:controller:token-cleaner(skipped)

- monitoring/mon-stack-grafana(created)

rbac.authorization.k8s.io/v1/RoleBinding:

- kube-public/kubeadm:bootstrap-signer-clusterinfo(skipped)

- kube-public/system:controller:bootstrap-signer(skipped)

- kube-system/cilium-config-agent(skipped)

- kube-system/kube-proxy(skipped)

- kube-system/kubeadm:kubelet-config(skipped)

- kube-system/kubeadm:nodes-kubeadm-config(skipped)

- kube-system/system::extension-apiserver-authentication-reader(skipped)

- kube-system/system::leader-locking-kube-controller-manager(skipped)

- kube-system/system::leader-locking-kube-scheduler(skipped)

- kube-system/system:controller:bootstrap-signer(skipped)

- kube-system/system:controller:cloud-provider(skipped)

- kube-system/system:controller:token-cleaner(skipped)

- monitoring/mon-stack-grafana(created)

scheduling.k8s.io/v1/PriorityClass:

- system-cluster-critical(skipped)

- system-node-critical(skipped)

storage.k8s.io/v1/CSIDriver:

- csi.cloudstack.apache.org(skipped)

storage.k8s.io/v1/StorageClass:

- cloudstack-custom(skipped)

v1/ConfigMap:

- default/kube-root-ca.crt(skipped)

- kube-node-lease/kube-root-ca.crt(skipped)

- kube-public/cluster-info(skipped)

- kube-public/kube-root-ca.crt(skipped)

- kube-system/cilium-config(skipped)

- kube-system/coredns(skipped)

- kube-system/extension-apiserver-authentication(skipped)

- kube-system/hubble-relay-config(skipped)

- kube-system/hubble-ui-nginx(skipped)

- kube-system/kube-apiserver-legacy-service-account-token-tracking(skipped)

- kube-system/kube-proxy(skipped)

- kube-system/kube-root-ca.crt(skipped)

- kube-system/kubeadm-config(skipped)

- kube-system/kubelet-config(skipped)

- monitoring/kube-root-ca.crt(failed)

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-grafana-config-dashboards(created)

- monitoring/mon-stack-kube-prometheus-alertmanager-overview(created)

- monitoring/mon-stack-kube-prometheus-apiserver(created)

- monitoring/mon-stack-kube-prometheus-cluster-total(created)

- monitoring/mon-stack-kube-prometheus-grafana-datasource(created)

- monitoring/mon-stack-kube-prometheus-grafana-overview(created)

- monitoring/mon-stack-kube-prometheus-k8s-coredns(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-cluster(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-multicluster(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-namespace(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-node(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-pod(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-workload(created)

- monitoring/mon-stack-kube-prometheus-k8s-resources-workloads-namespace(created)

- monitoring/mon-stack-kube-prometheus-kubelet(created)

- monitoring/mon-stack-kube-prometheus-namespace-by-pod(created)

- monitoring/mon-stack-kube-prometheus-namespace-by-workload(created)

- monitoring/mon-stack-kube-prometheus-node-cluster-rsrc-use(created)

- monitoring/mon-stack-kube-prometheus-node-rsrc-use(created)

- monitoring/mon-stack-kube-prometheus-nodes(created)

- monitoring/mon-stack-kube-prometheus-nodes-darwin(created)

- monitoring/mon-stack-kube-prometheus-persistentvolumesusage(created)

- monitoring/mon-stack-kube-prometheus-pod-total(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

- monitoring/mon-stack-kube-prometheus-workload-total(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-rulefiles-0(created)

- velero/kube-root-ca.crt(skipped)

v1/Endpoints:

- default/kubernetes(skipped)

- kube-system/hubble-peer(skipped)

- kube-system/hubble-relay(skipped)

- kube-system/hubble-ui(skipped)

- kube-system/kube-dns(skipped)

- kube-system/mon-stack-kube-prometheus-coredns(created)

- kube-system/mon-stack-kube-prometheus-kubelet(skipped)

- monitoring/alertmanager-operated(created)

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-kube-prometheus-alertmanager(created)

- monitoring/mon-stack-kube-prometheus-operator(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

- monitoring/mon-stack-kube-state-metrics(created)

- monitoring/mon-stack-prometheus-node-exporter(created)

- monitoring/prometheus-operated(created)

v1/Namespace:

- monitoring(created)

v1/PersistentVolume:

- pvc-167b0bc6-a3b1-4dff-a9a6-fae2204d939a(skipped)

v1/PersistentVolumeClaim:

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-db-prometheus-mon-stack-kube-prometheus-prometheus-0(created)

v1/Pod:

- kube-system/cilium-59rjg(failed)

- kube-system/cilium-kw86v(failed)

- kube-system/cilium-operator-6465748764-gbmrt(failed)

- kube-system/cilium-operator-6465748764-hngrd(failed)

- kube-system/cloudstack-csi-node-7zkrn(failed)

- kube-system/cloudstack-csi-node-crwmc(failed)

- kube-system/coredns-54ff9b9dd9-8qftm(failed)

- kube-system/coredns-54ff9b9dd9-r4wgq(failed)

- kube-system/hubble-relay-5446cbb587-9d8fb(failed)

- kube-system/hubble-ui-647f4487ff-s6kpj(failed)

- kube-system/konnectivity-agent-btbpc(failed)

- kube-system/konnectivity-agent-jsjcb(failed)

- kube-system/kube-proxy-nwgt9(failed)

- kube-system/kube-proxy-tcp67(failed)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-0(created)

- monitoring/mon-stack-grafana-684984b764-p6zpc(created)

- monitoring/mon-stack-kube-prometheus-operator-cb59fb64c-z99wc(created)

- monitoring/mon-stack-kube-state-metrics-869cf9756-rstfz(created)

- monitoring/mon-stack-prometheus-node-exporter-4wjcm(created)

- monitoring/mon-stack-prometheus-node-exporter-w7mj5(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-0(created)

- velero/node-agent-5lvs4(failed)

- velero/node-agent-n9v4r(failed)

- velero/velero-bfb7fb7dc-97rf4(failed)

v1/Secret:

- kube-system/bootstrap-token-9k59n2(skipped)

- kube-system/bootstrap-token-abcdef(skipped)

- kube-system/bootstrap-token-atkec9(skipped)

- kube-system/cilium-ca(skipped)

- kube-system/cs-csi-node-cloud-config(skipped)

- kube-system/hubble-relay-client-certs(skipped)

- kube-system/hubble-server-certs(skipped)

- kube-system/sh.helm.release.v1.cilium.v1(skipped)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager(created)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-generated(created)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-tls-assets-0(created)

- monitoring/alertmanager-mon-stack-kube-prometheus-alertmanager-web-config(created)

- monitoring/mon-stack-grafana(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-tls-assets-0(created)

- monitoring/prometheus-mon-stack-kube-prometheus-prometheus-web-config(created)

- monitoring/sh.helm.release.v1.mon-stack.v1(created)

- velero/cloud-credentials(skipped)

- velero/velero-repo-credentials(skipped)

v1/Service:

- default/kubernetes(failed)

- kube-system/hubble-peer(failed)

- kube-system/hubble-relay(failed)

- kube-system/hubble-ui(failed)

- kube-system/kube-dns(failed)

- kube-system/mon-stack-kube-prometheus-coredns(created)

- kube-system/mon-stack-kube-prometheus-kubelet(failed)

- monitoring/alertmanager-operated(created)

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-kube-prometheus-alertmanager(created)

- monitoring/mon-stack-kube-prometheus-operator(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

- monitoring/mon-stack-kube-state-metrics(created)

- monitoring/mon-stack-prometheus-node-exporter(created)

- monitoring/prometheus-operated(created)

v1/ServiceAccount:

- default/default(skipped)

- kube-node-lease/default(skipped)

- kube-public/default(skipped)

- kube-system/attachdetach-controller(skipped)

- kube-system/bootstrap-signer(skipped)

- kube-system/certificate-controller(skipped)

- kube-system/cilium(skipped)

- kube-system/cilium-operator(skipped)

- kube-system/cloudstack-csi-node(skipped)

- kube-system/clusterrole-aggregation-controller(skipped)

- kube-system/coredns(skipped)

- kube-system/cronjob-controller(skipped)

- kube-system/daemon-set-controller(skipped)

- kube-system/default(skipped)

- kube-system/deployment-controller(skipped)

- kube-system/disruption-controller(skipped)

- kube-system/endpoint-controller(skipped)

- kube-system/endpointslice-controller(skipped)

- kube-system/endpointslicemirroring-controller(skipped)

- kube-system/ephemeral-volume-controller(skipped)

- kube-system/expand-controller(skipped)

- kube-system/generic-garbage-collector(skipped)

- kube-system/horizontal-pod-autoscaler(skipped)

- kube-system/hubble-relay(skipped)

- kube-system/hubble-ui(skipped)

- kube-system/job-controller(skipped)

- kube-system/konnectivity-agent(skipped)

- kube-system/kube-proxy(skipped)

- kube-system/legacy-service-account-token-cleaner(skipped)

- kube-system/namespace-controller(skipped)

- kube-system/node-controller(skipped)

- kube-system/persistent-volume-binder(skipped)

- kube-system/pod-garbage-collector(skipped)

- kube-system/pv-protection-controller(skipped)

- kube-system/pvc-protection-controller(skipped)

- kube-system/replicaset-controller(skipped)

- kube-system/replication-controller(skipped)

- kube-system/resourcequota-controller(skipped)

- kube-system/root-ca-cert-publisher(skipped)

- kube-system/service-account-controller(skipped)

- kube-system/statefulset-controller(skipped)

- kube-system/token-cleaner(skipped)

- kube-system/ttl-after-finished-controller(skipped)

- kube-system/ttl-controller(skipped)

- monitoring/default(skipped)

- monitoring/mon-stack-grafana(created)

- monitoring/mon-stack-kube-prometheus-alertmanager(created)

- monitoring/mon-stack-kube-prometheus-operator(created)

- monitoring/mon-stack-kube-prometheus-prometheus(created)

- monitoring/mon-stack-kube-state-metrics(created)

- monitoring/mon-stack-prometheus-node-exporter(created)

- velero/default(skipped)

- velero/velero(skipped)

velero.io/v1/BackupStorageLocation:

- velero/default(failed)

velero.io/v1/ServerStatusRequest:

- velero/velero-cli-d7s64(created)

velero.io/v1/VolumeSnapshotLocation:

- velero/default(skipped)

The restore process only restores resources that are missing from the cluster; resources that already exist are skipped. There is a way to force a restore of all resources, but it is beyond the scope of this document.
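With a resource list this long, it can help to condense it before reading it line by line. The following is a minimal sketch, assuming the list comes from velero restore describe <RESTORE_NAME> --details and keeps the "- name(status)" layout shown above. The function names summarize_restore_status and list_failed_resources are hypothetical helpers, not part of Velero.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Velero). They read a restore resource
# list on stdin, in the "- namespace/name(status)" layout shown above.

# Count how many list items ended in each status (created, skipped, failed, ...).
summarize_restore_status() {
  awk '
    /^[[:space:]]*- / {
      line = $0
      sub(/[[:space:]]+$/, "", line)              # drop trailing whitespace
      if (match(line, /\([a-z]+\)$/)) {           # status in trailing parentheses
        status = substr(line, RSTART + 1, RLENGTH - 2)
        count[status]++
      }
    }
    END {
      for (s in count) printf "%s %d\n", s, count[s]
    }
  ' | sort
}

# Print "kind name" for every item marked (failed).
list_failed_resources() {
  awk '
    # A heading such as "v1/Service:" names the kind of the items below it.
    /^[[:space:]]*[^-[:space:]].*:[[:space:]]*$/ {
      kind = $1
      sub(/:$/, "", kind)
      next
    }
    /^[[:space:]]*- .*\(failed\)[[:space:]]*$/ {
      name = $2
      sub(/\(failed\)$/, "", name)
      print kind, name
    }
  '
}

# Example usage (requires the velero CLI and an existing restore):
#   velero restore describe <RESTORE_NAME> --details | summarize_restore_status
#   velero restore describe <RESTORE_NAME> --details | list_failed_resources
```

In the listing above, most failed items are resources that already existed in the cluster (system pods, services, leases), so the failed list is mainly useful for spotting anything in the restored namespace that did not come back.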

Troubleshooting Tips

- When modifying the storage bucket, the BackupRepository resources will need to be deleted on the cluster before running new backups.

- Both backups and restores can be diagnosed with the velero debug command:

velero debug --backup <BACKUP_NAME> --restore <RESTORE_NAME>

This command creates a compressed bundle containing the logs and the describe output of the backup and the restore, which is what is needed for diagnosis.

Invoking debug with only --backup <BACKUP_NAME> or only --restore <RESTORE_NAME> limits the bundle to the information for that backup or restore.
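For the first tip, the cleanup of the backup repository resources could look like the sketch below. It assumes Velero runs in the velero namespace (as in the resource list above) and that the resources are exposed as backuprepositories.velero.io; the run and reset_backup_repositories helpers and the DRY_RUN switch are hypothetical names introduced here for illustration. By default the script only prints the kubectl commands so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper script (not part of Velero).
# Assumption: Velero is installed in the "velero" namespace.
VELERO_NAMESPACE="${VELERO_NAMESPACE:-velero}"
# DRY_RUN=1 (default) only prints the commands; set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# List the existing backup repositories, then delete all of them so that
# the next backup starts from a clean state against the new bucket.
reset_backup_repositories() {
  run kubectl -n "$VELERO_NAMESPACE" get backuprepositories.velero.io
  run kubectl -n "$VELERO_NAMESPACE" delete backuprepositories.velero.io --all
}

# Example usage:
#   reset_backup_repositories            # print the commands only
#   DRY_RUN=0 reset_backup_repositories  # actually run them
```

Deleting these resources only removes the repository records in the cluster; whether data in the old bucket should also be cleaned up is a separate decision.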

Further reading

See the official Velero documentation.