Set up an NFS Mount on Linux

Prerequisites

We need to install a package called nfs-common (Ubuntu, Debian) or nfs-utils (RHEL, CentOS) on the client host, which provides NFS client functionality.
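The same setup script may need to run on both families of distributions. In that case the package name can be chosen automatically. The following is a minimal sketch that assumes the host provides the standard /etc/os-release file and inspects only its ID and ID_LIKE fields. The function names nfs_client_package and install_nfs_client are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: choose the NFS client package for the running distribution.
# Assumption: an os-release file with ID / ID_LIKE fields (e.g. ID=ubuntu,
# ID_LIKE=debian or ID="centos", ID_LIKE="rhel fedora").

nfs_client_package() {
    local os_release="${1:-/etc/os-release}"
    local id id_like
    # Keep only the values of the ID= and ID_LIKE= lines, without quotes.
    id=$(sed -n 's/^ID=//p' "$os_release" | tr -d '"')
    id_like=$(sed -n 's/^ID_LIKE=//p' "$os_release" | tr -d '"')

    case " $id $id_like " in
        *" debian "* | *" ubuntu "*) echo "nfs-common" ;;
        *" rhel "* | *" centos "* | *" fedora "*) echo "nfs-utils" ;;
        *) echo "unsupported distribution: ${id:-unknown}" >&2; return 1 ;;
    esac
}

install_nfs_client() {
    # Installs the package with the matching package manager (needs sudo).
    local pkg
    pkg=$(nfs_client_package) || return 1
    if [ "$pkg" = "nfs-common" ]; then
        sudo apt update && sudo apt install -y "$pkg"
    else
        sudo dnf install -y "$pkg"
    fi
}
```

For example, install_nfs_client runs apt on an Ubuntu host and dnf on a CentOS host. Distributions outside these two families are reported as unsupported rather than guessed.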

Refresh the local package index before installation (Ubuntu, Debian) to ensure that you have up-to-date information:

For Ubuntu, Debian:

sudo apt update

sudo apt install nfs-common

For RHEL, CentOS:

sudo dnf install nfs-utils

To make the remote shares available on the client, we need to mount the directories exported by the NFS server onto empty directories on the client.

For example:

sudo mkdir -p /mnt/nfs

Now that we have a location for the remote share, we can mount it using the IP address of our NetApp NFS server:

sudo mount host_ip:/vol0/vol1 /mnt/nfs

Testing NFS Access
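The write-and-check test in this section can also be scripted, which helps when verifying several clients. The following is a minimal sketch that assumes GNU coreutils stat and a share mounted at /mnt/nfs, as in the mount example. The function name check_nfs_write is hypothetical. It prints the owner and group both by name and by numeric ID, which shows which UID the server actually assigned.

```bash
#!/usr/bin/env bash
# Sketch: write a probe file into a mounted NFS share, print the owner the
# server assigned to it (names and numeric IDs), then remove the probe file.
# Assumes GNU coreutils stat; note that an existing general.test is deleted.

check_nfs_write() {
    local dir="${1:-/mnt/nfs}"
    local probe="$dir/general.test"

    if ! touch -- "$probe"; then
        echo "cannot write to $dir" >&2
        return 1
    fi
    # %U:%G = owner and group names, %u:%g = numeric UID and GID
    stat -c '%U:%G (%u:%g)' -- "$probe"
    rm -f -- "$probe"
}
```

Running it as root (for example from a root shell) reproduces the sudo touch test.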

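- First, write a test file to the /mnt/nfs share:

sudo touch /mnt/nfs/general.test

- Then, check the file ownership:

ls -l /mnt/nfs/general.test

- Output:

-rw-r--r-- 1 nobody nogroup 0 Aug 1 13:31 /mnt/nfs/general.test

- The file is owned by nobody and nogroup even though it was created with sudo. This usually means that the NFS server maps requests from root to its anonymous user (root squashing).

Mounting NFS Directories at Boot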

We can automatically mount the remote NFS shares at boot by adding them to the /etc/fstab file on the client.
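Editing /etc/fstab by hand works, but a script that adds the entry only once is safer to re-run. The following is a minimal sketch that assumes the share, mount point, and mount options from the example fstab line in this section. The function name add_nfs_fstab_entry is hypothetical. The file path is a parameter, so you can try the function on a copy first.

```bash
#!/usr/bin/env bash
# Sketch: append an NFS line to an fstab-style file unless the mount point is
# already listed. The options mirror the example line in this section.

add_nfs_fstab_entry() {
    local share="$1" mount_point="$2" fstab="${3:-/etc/fstab}"
    local options="auto,nofail,noatime,nolock,intr,tcp,actimeo=1800"

    # Field 2 of every non-comment fstab line is the mount point.
    if awk -v mp="$mount_point" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
        echo "$fstab already has an entry for $mount_point" >&2
        return 0
    fi
    printf '%s %s nfs %s 0 0\n' "$share" "$mount_point" "$options" >> "$fstab"
}
```

For example, as root, run add_nfs_fstab_entry netapp_ip:/vol0/vol1 /mnt/nfs, then run mount -a to mount everything listed in /etc/fstab. You can run findmnt --verify beforehand to check the file for errors.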

- Open this file with root privileges in your text editor:

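sudo nano /etc/fstab

- At the bottom of the file, add a line for each of your shares. Each line will look like this:

netapp_ip:/vol0/vol1 /mnt/nfs nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0

- Note that, according to the nfs(5) man page, the intr option is ignored by kernels newer than 2.6.25. With nolock, file locks only protect against other processes on the same client.

Set up an NFS Mount on Windows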

Step 1 – Install the ‘Client for NFS’

Install the ‘Client for NFS’ feature on your Windows machine to set up the NFS client for Windows:

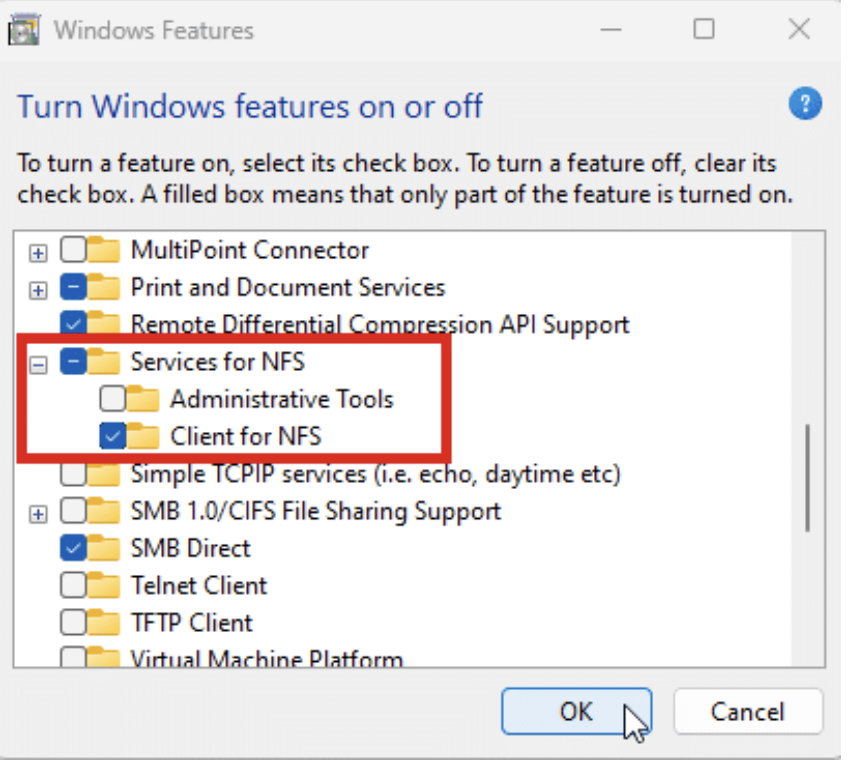

- In Windows 10 or Windows 11, open Control Panel and go to Programs > Turn Windows features on or off.

- Expand Services for NFS and check the box next to Client for NFS, as shown below:

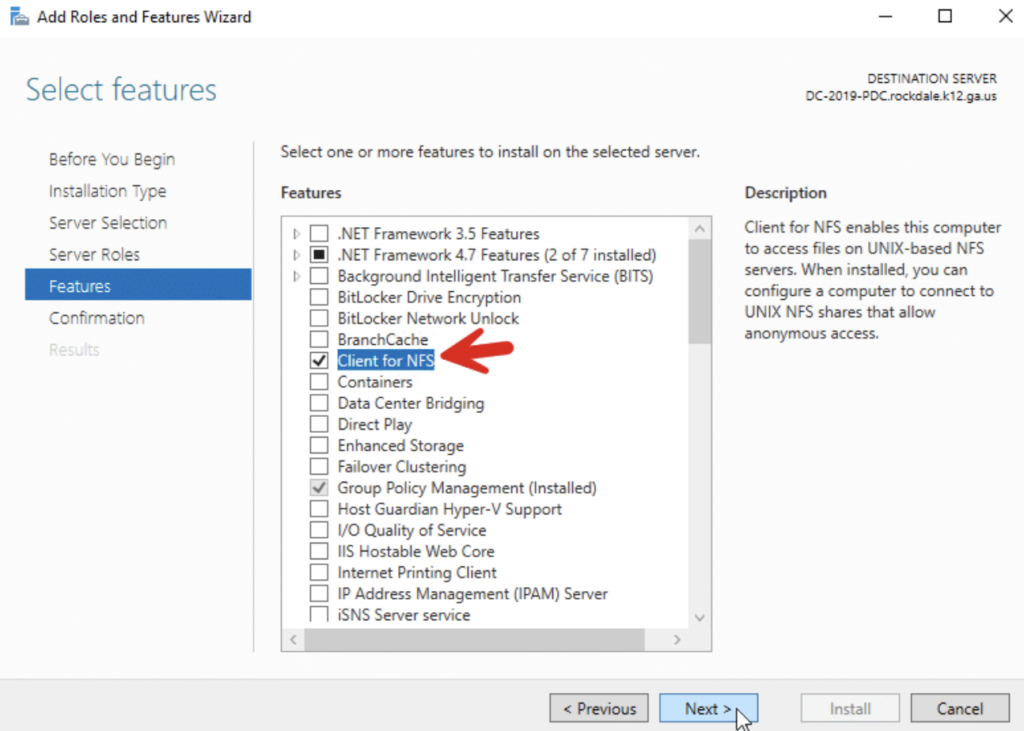

- For Windows Server 2012 and above, run the Add Roles and Features Wizard and select Client for NFS on the Features page, as shown below:

Step 2 – Verify the client and connectivity

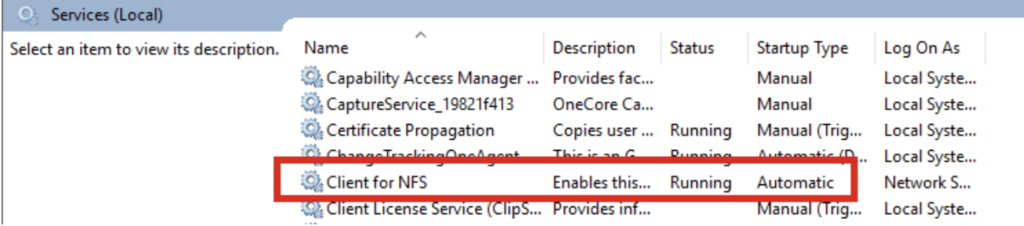

- Verify that the NFS Client service is running on your machine, as shown below:

Step 3 – Perform identity mapping

- To enable Windows users to authenticate to a Unix server providing NFS exports, we need to map Windows users to the Unix user identifiers (UIDs) and group identifiers (GIDs) used by Unix-like operating systems.

- This mapping allows the Unix server to determine which user is making each NFS request and to apply the matching file permissions.

- Identity mapping can be done using any of the following methods:

- Active Directory (if integrated with the NFS environment)

- Local user mapping files

- Windows Registry settings

- The first option is preferred for security and scalability reasons.

Method A (Preferred): Perform Identity Mapping in Active Directory (AD)

If both the Unix NFS server and Windows NFS client are joined to the same Active Directory domain, then we can use identity mapping in Active Directory. This is the preferred method whenever possible.

By default, the Windows NFS client does not look up identity mapping in Active Directory. However, we can change that by running the following command in an elevated PowerShell session on the NFS client:

Set-NfsMappingStore -EnableADLookup $True -ADDomainName "yourdomain.com"

- You can add these LDAP options to specify your domain controllers:

Set-NfsMappingStore -LdapServers "dc1.yourdomain.com","dc2.yourdomain.com"

- Restart the NFS client service. For example, in an elevated command prompt, run nfsadmin client stop followed by nfsadmin client start.

- Next, we need to configure our identity mapping. We can do that in Active Directory Users and Computers as follows:

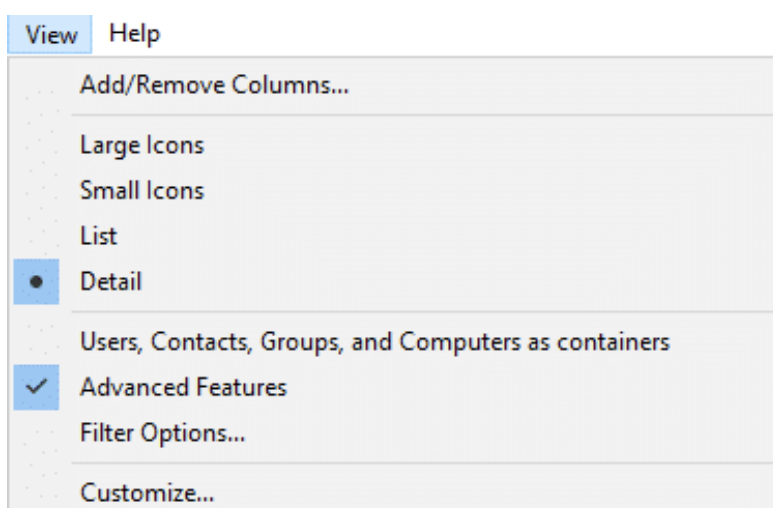

- Enable Advanced Features under the View drop-down, as shown below.

- Right-click on the object you want to view and select Properties. In the Attribute Editor tab, select the uidNumber or gidNumber attribute and click Edit.

- Enter a value, and click OK to save your changes.

- Alternatively, you can use the following PowerShell command:

Set-ADUser -Identity <SamAccountName> -Add @{uidNumber="<user_unix_uid>";gidNumber="<user_unix_gid>"}

- Replace the following:

<SamAccountName> is the user to modify, given by its SAM account name (e.g., jdoe). The -Identity parameter accepts a distinguished name, GUID, SID, or SAM account name.

<user_unix_uid> is the Unix user ID (UID) for the user, stored in the uidNumber attribute.

<user_unix_gid> is the Unix group ID (GID) for the user's primary group, stored in the gidNumber attribute.

The @{ ... } hashtable contains the new attributes to add.

To perform this task automatically, create a CSV file containing user data and loop through it.
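One way to produce that CSV is to export the IDs from the Unix side. The following is a minimal sketch with two assumptions: Windows SAM account names match the Unix user names, and regular accounts start at UID 1000 (a common convention, not a rule). The function name passwd_to_csv and the CSV column names are hypothetical. The SamAccountName column is used because that is one of the forms Set-ADUser -Identity accepts.

```bash
#!/usr/bin/env bash
# Sketch: turn passwd-format lines (name:pw:uid:gid:gecos:home:shell) read from
# stdin into a CSV for the Set-ADUser loop.
# Assumptions: Windows SAM account names equal the Unix user names, and regular
# users have UIDs >= min_uid (default 1000). UID 65534 (nobody) is skipped.

passwd_to_csv() {
    local min_uid="${1:-1000}"
    echo "SamAccountName,uidNumber,gidNumber"
    awk -F: -v min="$min_uid" '$3 + 0 >= min + 0 && $3 + 0 != 65534 { print $1 "," $3 "," $4 }'
}
```

For example, run getent passwd | passwd_to_csv > unix-ids.csv on a Unix host that resolves the same users. On the Windows side, the loop can read the file with Import-Csv and call Set-ADUser once per row.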

Method B: Perform Identity Mapping using Local Configuration Files

Using local user mapping files is a straightforward approach to establishing correspondence between Windows users and Unix UIDs/GIDs without relying on Active Directory. This method uses two main configuration files:

passwd — Maps Windows users to Unix UIDs

group — Maps Windows groups to Unix GIDs

Both files are typically located in the C:\Windows\system32\drivers\etc\ directory. They follow a specific format, with each line representing a user or group mapping.

When a Windows user attempts to access an NFS resource, the NFS service consults these files to look up the user’s Windows account name and retrieve the corresponding UID and GID, which are then used for NFS operations.

This method is simple to set up and manage in small environments and is particularly useful for standalone servers or workgroups.

However, because it requires manual maintenance of mapping files, it doesn’t scale well for large environments.

In addition, while it offers a solution for environments where Active Directory integration is not feasible, it’s important to consider security implications and regularly audit the mappings to ensure they remain accurate and secure.

Method C: Perform Identity Mapping using Windows Registry Settings

This method involves setting registry values to specify a default UID and GID that the Windows NFS client will use when accessing NFS shares.

It’s particularly useful when you want all NFS access from the Windows client to appear as a specific Unix user, regardless of the Windows user account.

To implement this mapping, use the following PowerShell commands:

New-ItemProperty "HKLM:\SOFTWARE\Microsoft\ClientForNFS\CurrentVersion\Default" -Name AnonymousUID -Value <unix_export_owner_uid> -PropertyType "DWord"

New-ItemProperty "HKLM:\SOFTWARE\Microsoft\ClientForNFS\CurrentVersion\Default" -Name AnonymousGID -Value <unix_export_owner_gid> -PropertyType "DWord"
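The <unix_export_owner_uid> and <unix_export_owner_gid> values are the numeric IDs of the export's owner. The following minimal sketch reads them on a Linux host that can see the export root, either the NFS server itself or a Linux client with the share mounted as in the Linux section. It assumes GNU coreutils stat, and the function name export_owner_ids is hypothetical. With NFSv4, a client that cannot map the owner's name may show 65534 (nobody) instead of the real ID.

```bash
#!/usr/bin/env bash
# Sketch: print the numeric owner UID and GID of an export's root directory,
# i.e. candidate values for AnonymousUID and AnonymousGID.
# Assumes GNU coreutils stat (Linux).

export_owner_ids() {
    local export_path="$1"
    # %u = numeric owner UID, %g = numeric owner GID
    stat -c '%u %g' -- "$export_path"
}
```

For example, export_owner_ids /mnt/nfs prints two numbers. The first replaces <unix_export_owner_uid> and the second replaces <unix_export_owner_gid>.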

You need to reboot for the new settings to take effect. This mapping applies to all NFS mounts made by the Windows client.

Warning

Note that this method applies the same UID/GID to all NFS access from the Windows client, which may not provide sufficient access control in multi-user environments.

In addition, modifying the registry requires administrative privileges, which could be a security concern.

For these and other reasons, this method is considered insecure and is not recommended.

After performing identity mapping, you can mount the NFS share in Windows using the command prompt.

Step 4 – Mount the NFS Share in Windows

- Mounting an NFS share means attaching a remote file system (exported by an NFS server) to a directory on the local file system of a client machine.

- When a client mounts an NFS share, it connects to the remote file system and makes it appear as if the NFS share is part of the local directory structure.

- To mount NFS on Windows, map it to an available drive letter using the command prompt, as follows:

mount \\<nfs_server_ip_address>\<path to nfs export> Z:

- Replace the following:

<nfs_server_ip_address> is the IP address of your NFS server.

<path to nfs export> is the export path on the server.

Z: is the drive letter to which the NFS share will be mounted on your Windows computer.

- You can add additional options. For instance, mount -o anon specifies that the connection should be made anonymously, and mount -o nolock overrides the default file locking used by NFS.

- After running the mount command, check whether the NFS share was mounted successfully by running the net use command, which displays all mapped network drives.

Mounting an iSCSI volume on Linux

Step 1 – Check for system updates

- Make sure that your system is updated and includes the following packages. Use the following commands to install the packages.

For Ubuntu, Debian:

sudo apt install open-iscsi

sudo apt install multipath-tools

For RHEL, CentOS:

sudo yum install iscsi-initiator-utils

sudo yum install device-mapper-multipath

- Installing the packages creates the following two files:

/etc/iscsi/iscsid.conf

/etc/iscsi/initiatorname.iscsi

Step 2 – Set up multipath

- After you install the multipath utility, create an empty configuration file called /etc/multipath.conf.

- Add the following settings to it, adjusting the default values as needed:

defaults {
    user_friendly_names no
    max_fds max
    flush_on_last_del yes
    queue_without_daemon no
    dev_loss_tmo infinity
    fast_io_fail_tmo 5
}

# All data in the following section must be specific to your system.
blacklist {
    wwid "SAdaptec*"
    devnode "^hd[a-z]"
    devnode "^(ram|raw|loop|fd|md|dm-|sr|scd|st)[0-9]*"
    devnode "^cciss.*"
}

devices {
    device {
        vendor "NETAPP"
        product "LUN"
        path_grouping_policy group_by_prio
        features "3 queue_if_no_path pg_init_retries 50"
        prio "alua"
        path_checker tur
        failback immediate
        path_selector "round-robin 0"
        hardware_handler "1 alua"
        rr_weight uniform
        rr_min_io 128
    }
}

- Because user_friendly_names is set to no in the defaults section, the multipath devices are named after their WWID, in the form /dev/mapper/<WWID>. Setting user_friendly_names to yes gives names of the form /dev/mapper/mpathX instead (mpatha, mpathb, and so on), as in the sample output in Step 6.

- Save the configuration file and exit the editor, if necessary.

- Start the multipath service.

For Ubuntu, Debian:

sudo service multipath-tools start

For RHEL, CentOS:

sudo systemctl start multipathd

Step 3 – Update the /etc/iscsi/initiatorname.iscsi file

- Update the following file with the correct IQN:

/etc/iscsi/initiatorname.iscsi

InitiatorName=<IQN>

Step 4 – Configure credentials

- Edit the following settings in /etc/iscsi/iscsid.conf, using the username and password from the portal. Use uppercase for CHAP names.

node.session.auth.authmethod = CHAP

node.session.auth.username = <Username-value-from-Portal>

node.session.auth.password = <Password-value-from-Portal>

discovery.sendtargets.auth.authmethod = CHAP

discovery.sendtargets.auth.username = <Username-value-from-Portal>

discovery.sendtargets.auth.password = <Password-value-from-Portal>
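When several hosts need the same settings, you can script these edits instead of making them by hand. The following is a minimal sketch that assumes the file uses the key = value layout shown above. The function names set_iscsid_option and configure_chap are hypothetical. The first line that sets a key, whether commented out or not, is replaced, and any missing keys are appended.

```bash
#!/usr/bin/env bash
# Sketch: set "key = value" options in an iscsid.conf-style file.
# The first line that sets the key (commented out with "#" or not) is replaced;
# if no such line exists, the option is appended. Values are passed through the
# environment so that passwords containing "\" or "&" are written unchanged.

set_iscsid_option() {
    local conf="$1" key="$2" value="$3" tmp
    tmp=$(mktemp) || return 1
    KEY="$key" VALUE="$value" awk '
        BEGIN { key = ENVIRON["KEY"]; value = ENVIRON["VALUE"] }
        {
            line = $0
            sub(/^[# \t]+/, "", line)          # ignore a leading "#" and blanks
            eq = index(line, "=")
            name = substr(line, 1, eq - 1)
            sub(/[ \t]+$/, "", name)           # trim blanks before "="
            if (!done && eq > 0 && name == key) {
                print key " = " value
                done = 1
                next
            }
            print
        }
        END { if (!done) print key " = " value }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"
}

configure_chap() {
    # Hypothetical helper: apply the six CHAP settings listed above.
    local conf="$1" user="$2" pass="$3"
    set_iscsid_option "$conf" node.session.auth.authmethod CHAP
    set_iscsid_option "$conf" node.session.auth.username "$user"
    set_iscsid_option "$conf" node.session.auth.password "$pass"
    set_iscsid_option "$conf" discovery.sendtargets.auth.authmethod CHAP
    set_iscsid_option "$conf" discovery.sendtargets.auth.username "$user"
    set_iscsid_option "$conf" discovery.sendtargets.auth.password "$pass"
}
```

For example, as root, run configure_chap /etc/iscsi/iscsid.conf <Username-value-from-Portal> <Password-value-from-Portal>, then restart the service.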

Restart the iscsi service for the changes to take effect.

sudo systemctl restart iscsid.service

Step 5 – Discover the storage device and log in

The iscsiadm utility is a command-line tool used to discover and log in to iSCSI targets, and to access and manage the open-iscsi database. For more information, see the iscsiadm(8) man page. In this step, discover the device by using the target IP address that was obtained from Leaseweb.
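The discovery command in this step prints one line per portal and target, in the form portal:port,tpgt followed by the target IQN. On a multipath setup, the same target should appear once per portal. The following is a minimal sketch that summarizes this output and assumes that line layout. The function name summarize_discovery is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: summarize "iscsiadm -m discovery -t sendtargets" output read from
# stdin, assuming one "portal:port,tpgt target-iqn" line per portal/target.
# Prints each target IQN once, followed by the number of portals listing it.

summarize_discovery() {
    awk 'NF >= 2 { if (!($2 in count)) order[++n] = $2; count[$2]++ }
         END { for (i = 1; i <= n; i++) print order[i], count[order[i]] }'
}
```

For example, run sudo iscsiadm -m discovery -t sendtargets -p <ip-value-from-leaseweb-provided-ip> | summarize_discovery. A count of 2 next to the target IQN indicates that both paths were discovered.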

- Run the discovery against the iSCSI array.

sudo iscsiadm -m discovery -t sendtargets -p <ip-value-from-leaseweb-provided-ip>

- If the portal IP addresses and target names are displayed, the discovery is successful.

- Configure automatic login.

sudo iscsiadm -m node --op=update -n node.conn[0].startup -v automatic

sudo iscsiadm -m node --op=update -n node.startup -v automatic

- Enable the necessary services. On RHEL and CentOS, the open-iscsi service is named iscsi.

sudo systemctl enable open-iscsi

sudo systemctl enable iscsid

- Restart the iscsid service.

sudo systemctl restart iscsid.service

- Log in to the iSCSI array.

sudo iscsiadm -m node --loginall=automatic

Step 6 – Verifying configuration

- Validate that the iSCSI session is established.

sudo iscsiadm -m session -o show

- Validate that multiple paths exist.

sudo multipath -ll

- This command reports the paths. If multipath is configured correctly, each volume shows a number of paths equal to the number of iSCSI sessions. Depending on the path grouping policy, these paths can be spread over more than one path group, as in the sample output below. It is possible to attach the volume with only a single path, but connections should be established on both paths so that no disruption of service occurs.
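You can automate the path check, for example in a monitoring job. The following is a minimal sketch that assumes the multipath -ll layout shown in the sample output in this step: device header lines contain a dm-N field, and path lines contain a host:channel:target:lun address followed by a state such as active ready. The function names count_active_paths and check_min_paths are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: count "active ready" paths per multipath device in "multipath -ll"
# output read from stdin. Header lines are recognized by their " dm-N " field,
# path lines by their host:channel:target:lun address.

count_active_paths() {
    awk '
        /^[^ |`].* dm-[0-9]+ / { dev = $1; order[++n] = dev; paths[dev] = 0; next }
        dev != "" && /[0-9]+:[0-9]+:[0-9]+:[0-9]+ / && /active ready/ { paths[dev]++ }
        END { for (i = 1; i <= n; i++) print order[i], paths[order[i]] }
    '
}

check_min_paths() {
    # Hypothetical helper: print and fail if any device has fewer than $1 paths.
    local min="${1:-2}"
    count_active_paths | awk -v min="$min" '
        $2 + 0 < min + 0 { print "degraded: " $1 " has " $2 " path(s)"; bad = 1 }
        END { exit bad }'
}
```

For example, sudo multipath -ll | check_min_paths 2 prints nothing and exits with status 0 when every device has at least two healthy paths. Otherwise it prints one degraded line per affected device.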

- After the volume is mounted and accessible on the host, you can create a file system as you normally do with any block device.

Example multipath -ll output:

sudo multipath -ll

mpathb (360014051f65c6cb11b74541b703ce1d4) dm-1 LIO-ORG,TCMU device
size=1.0G features='0' hwhandler='0' wp=rw
|-+- policy='service-time 0' prio=1 status=active
| `- 7:0:0:2 sdh 8:112 active ready running
`-+- policy='service-time 0' prio=1 status=enabled
  `- 8:0:0:2 sdg 8:96 active ready running
mpatha (36001405b816e24fcab64fb88332a3fc9) dm-0 LIO-ORG,TCMU device
size=1.0G features='0' hwhandler='0' wp=rw
|-+- policy='service-time 0' prio=1 status=active
| `- 7:0:0:1 sdj 8:144 active ready running
`-+- policy='service-time 0' prio=1 status=enabled
  `- 8:0:0:1 sdi 8:128 active ready running

Set up iSCSI on Windows

- In Windows Server 2008, add Multipath I/O as a feature: open Server Manager, go to the Features section, and add the Multipath I/O feature.

- On Windows Server 2019 and later, you also need to enable MPIO for iSCSI.

- In Server Manager, click Dashboard > Tools > MPIO. In the MPIO Properties dialog box, select the Discover Multi-Paths tab, and then select Add support for iSCSI devices.

- Configure the iSCSI Initiator on the client.

- Note: When you start the iSCSI Initiator for the first time, a prompt appears. Click Yes.

- Click Start > Run and type iscsicpl to open the iSCSI software initiator configuration window.

- Click Discover Portal and add both IP addresses, one after the other.

- Click Advanced… and configure the information in the General tab. Make sure the Local Adapter and the Initiator IP are selected appropriately.

- Configure MPIO: open Administrative Tools and start MPIO.

- On the Discover Multi-Paths tab, select the Add support for iSCSI devices option.

- Click Add. A prompt asks you to restart the computer.

- Click Yes to reboot the machine.

- Note: The Add support for iSCSI devices option is unchecked by default. It is necessary to add a virtual disk to the iSCSI Target and then make at least one iSCSI connection before you have the option to enable MPIO for iSCSI.

- After the reboot, MSFT2005iSCSIBusType_0x9 appears in the list of MPIO devices.

- Click Start > Run and type iscsicpl to open the iSCSI software initiator configuration window.

- Click the Targets tab. The target status is Inactive.

- Click Connect and select Enable multi-path.

- Click Advanced… and open the General tab.

- From the Target portal IP list, select the IP address for the first path.

- Connect using the second path as well: repeat the Connect and Advanced… steps, this time selecting the IP address for the second path in the Target portal IP list. Again, make sure the values of the ‘Local adapter’ and ‘Initiator IP’ fields remain at ‘Default’.

- Go back to the iSCSI Initiator’s main window, open the Targets tab, select the target, and click Properties.

- Click Devices, then click MPIO to configure the load balancing policy. All load balancing policies are supported. Round Robin With Subset is generally recommended and is the default for arrays with ALUA enabled. Round Robin is the default for active-active arrays that do not support ALUA. Round Robin is not recommended for ALUA arrays, because it also balances I/O across non-optimized paths.

- To view the advanced path details, click on a path and click the Details button to view the information.